XAI in Adversarial Environments

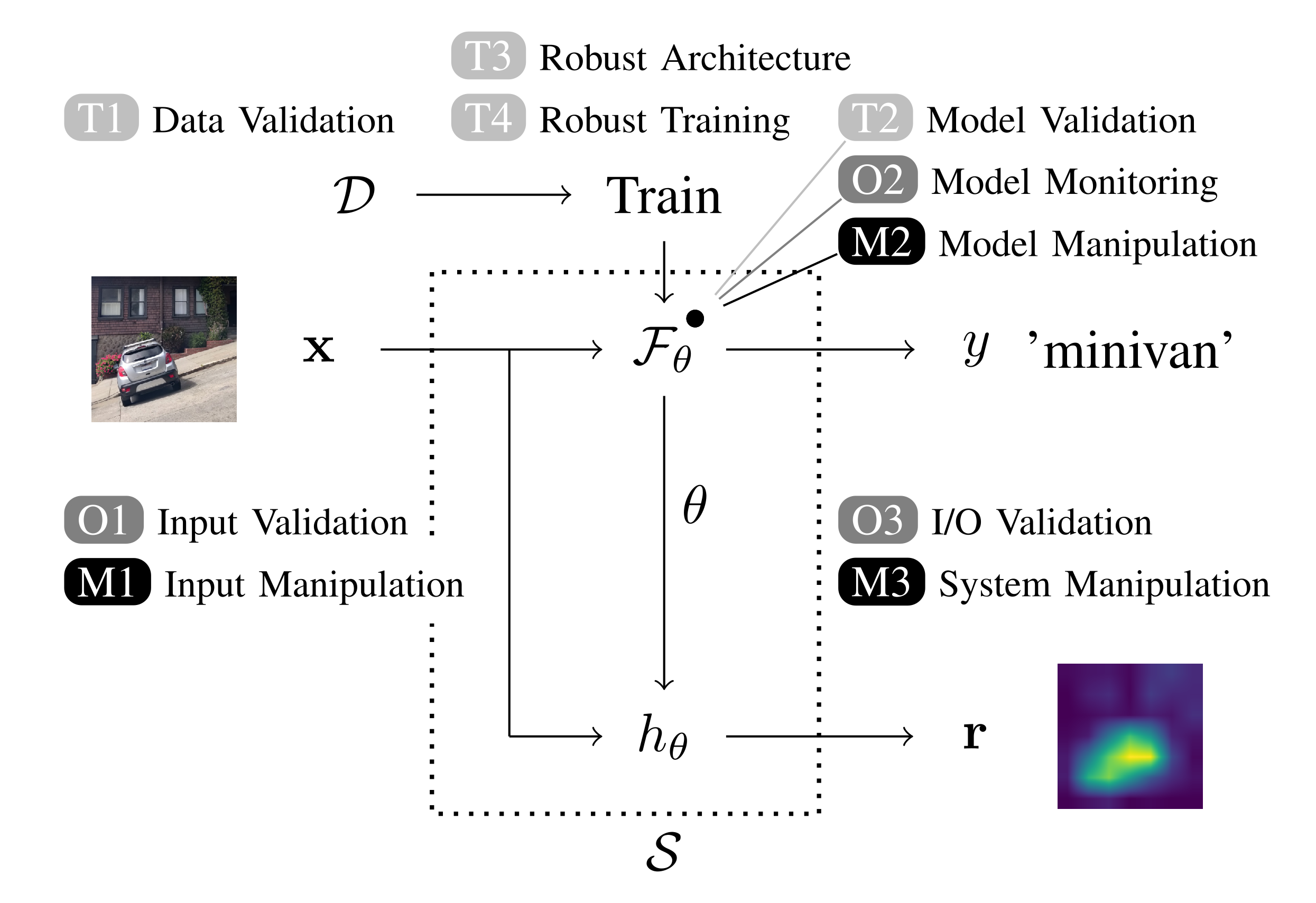

Modern deep learning methods have long been considered black boxes because they offer little insight into their decision-making process. Recent advances in explainable machine learning have turned the tables: post-hoc explanation methods enable precise relevance attribution of input features for otherwise opaque models such as deep neural networks. This progress has raised expectations that these techniques can uncover attacks against learning-based systems, such as adversarial examples or neural backdoors. Unfortunately, current explanation methods are themselves not robust against manipulation.

- "SoK: Explainable Machine Learning in Adversarial Environments," IEEE S&P 2024

(paper, poster)

@InProceedings{Noppel2024SoK,
  author    = {Maximilian Noppel and Christian Wressnegger},
  booktitle = {Proc. of the 45th {IEEE} Symposium on Security and Privacy ({S\&P})},
  title     = {{SoK}: {E}xplainable Machine Learning in Adversarial Environments},
  year      = {2024},
  month     = may,
  day       = {20.-23.},
}

- "A Brief Systematization of Explanation-Aware Attacks," AI 2024

(paper)

@InProceedings{Noppel2024Brief,
  author    = {Maximilian Noppel and Christian Wressnegger},
  booktitle = {Proc. of 47th German Conference on Artificial Intelligence},
  title     = {A Brief Systematization of Explanation-Aware Attacks},
  year      = 2024,
  month     = sep
}